As the industry strives to enhance cloud infrastructure to increase resource efficiency and security, it starts to look beyond what can be done with traditional means of virtualization. Beyond the horizon, there is WebAssembly – a technology with widespread adoption in all major web browsers. WebAssembly is now becoming a hot topic in the Cloud Native ecosystem, too. The organizers of KubeCon + CloudNativeCon Europe 2022 have dedicated a full-day event just to WebAssembly.

This post will explain the motivation for using WebAssembly for running serverless workloads. I'll take a brief look at what WebAssembly is and which tools currently exist. Finally, I'll describe my proposal for an architecture for running WebAssembly-based serverless workloads.

Introduction to WebAssembly & its use cases

At its core, WebAssembly (Wasm) is an open standard for cross-platform executables. The spirit is comparable to Java bytecode, but the WebAssembly standard is a lot simpler in that it doesn't define system-level APIs or even complex data types. The Wasm initiative was driven by browser vendors, making it the only new executable format for web browsers since the advent of JavaScript in 1995. Wasm is designed to provide near-native execution performance. This is combined with a well-thought-out isolation concept; after all, its main use case is to run semi-trusted code on arbitrary web pages.
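To make "cross-platform executable" a little more concrete: every Wasm binary starts with the same 8-byte preamble, the magic bytes `\0asm` followed by the format version (currently 1) as a little-endian 32-bit integer. The following is a minimal Python sketch that recognizes this preamble. The function name `wasm_version` is made up for illustration, and the check says nothing about whether the rest of the file is a valid module.

```python
import struct

WASM_MAGIC = b"\x00asm"  # the four bytes 0x00 0x61 0x73 0x6D
WASM_VERSION = 1         # version of the Wasm binary format


def wasm_version(blob: bytes):
    """Return the format version if blob starts with a Wasm preamble, else None.

    Hypothetical helper for illustration; it only inspects the first 8 bytes.
    """
    if len(blob) < 8 or blob[:4] != WASM_MAGIC:
        return None
    (version,) = struct.unpack("<I", blob[4:8])
    return version


# The preamble on its own already forms the smallest possible (empty) module.
EMPTY_MODULE = WASM_MAGIC + struct.pack("<I", WASM_VERSION)
```

The same eight bytes appear regardless of the CPU architecture or operating system the module will eventually run on, which is part of what makes the format portable.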

The bare-bones nature of the Wasm standard is a blessing and a curse. On the one hand, it is what makes Wasm so versatile, and it is the reason Wasm is equally applicable to running code in web browsers and in the cloud. On the other hand, there are no common programming APIs: e.g., the WebAssembly standard does not specify how to open and read a file or open a network connection. The WebAssembly System Interface (WASI) project contributes this common denominator for the most important ways a Wasm module communicates with the outside world. Note that WASI is still evolving: at the time of writing, you can read and write a file, but not use sockets for network communication, for example.

⚙️ Modern Runtimes for Cloud Computing Workloads

- Hypervisor VM and microVMs (e.g., AWS Firecracker)

- Application Containers (e.g., Docker)

- High-level language VMs (e.g., JVM, Python, V8, WebAssembly)

This combination of performance with a high level of isolation drives the interest in Wasm in the cloud-native community. In the same way that Docker containers superseded virtual machines as the most popular means to separate tenants in shared computing environments, Wasm could be the next step toward using hardware resources even more economically. This applies not only to the cloud and the edge. For constrained devices, such as those in the Internet of Things (IoT), Wasm could be a breakthrough for application isolation, since even the comparably low overhead of Docker containers presents a challenge for such devices.

Wasm browser support is good, but for which headless use cases has it been used so far? In my research, I came across a few:

- Proprietary Function-as-a-Service (FaaS, "serverless") providers: Cloudflare Workers, Fastly Compute@Edge

- Language-agnostic application-level logic: Envoy HTTP filters, Kubewarden policies

- Open-source application platforms: wasmCloud, Vino

- Kubernetes ecosystem:

  - Krustlet implements the Kubelet API and runs Wasm modules as pods (see deislabs/wagi for a simple Wasm-backed HTTP handler)

  - WasmEdge is a versatile WebAssembly runtime that can be integrated into existing programs and can also be used as a container runtime

  - wasmCloud is an Actor model implementation for distributed Wasm-based apps

- Public cloud: WASI node pools on Microsoft Azure AKS (based on the Krustlet project)

- Distributed systems research: Faasm

Below is a list of useful learning resources on WebAssembly:

- The blog post series on the Mozilla blog by @linclark does a great job of explaining the core concepts. The same is true of the post on WASI.

- Read “WebAssembly meets Kubernetes with Krustlet” for another take on the motivation for Wasm on Kubernetes and how to test-drive Wasm modules.

A Serverless WebAssembly Runtime for Cloud-Native Workflow Engines

For my master's thesis in IT-Systems Engineering at the Hasso-Plattner-Institute in Potsdam, I want to bring the efficiency & security benefits of WebAssembly to the CNCF ecosystem in the form of a Wasm runtime for serverless workloads on Kubernetes. The primary use case is workflow tasks in a distributed workflow orchestrator like Kubernetes and Argo Workflows.

The main pillars of this project follow directly from the working title:

- Serverless: The runtime must scale well within a cluster of distributed machines. The end-user can call WebAssembly-based remote functions just like in-process ones, and the runtime implementation must take care of scaling, fault handling, etc.

- Cloud-Native: We decided to focus on Kubernetes and the “Cloud-Native” ecosystem because of its popularity and open-source approach.

- Workflow Engine: The runtime will integrate seamlessly with state-of-the-art workflow engines without them having to know implementation-specifics of WebAssembly modules and their distributed execution.

I have already briefly explained the benefits of WebAssembly modules compared to Docker containers. Wasm modules can be instantiated in a matter of milliseconds and are a lot smaller than container images. Moreover, the runtime improves cluster resource efficiency because it removes both the container management and runtime overhead. It is also a promising approach to running untrusted code in a distributed setting, as the Wasm sandbox can enforce strong security guarantees.

📄 Find out more on the thesis topic in my topic proposal document:

Outlook: WebAssembly Modules in Argo Workflows

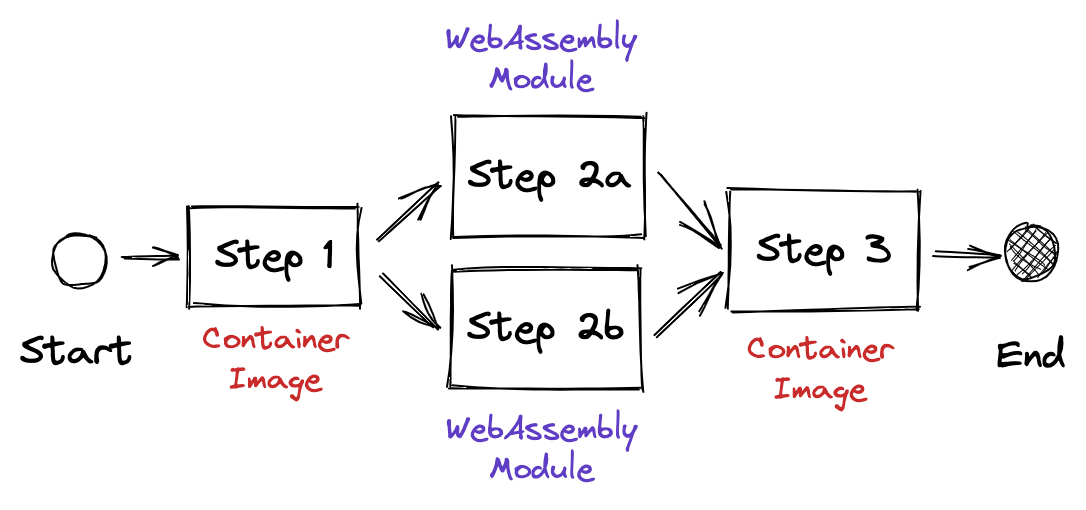

The graphic shows an example of a workflow with three steps. Assume this workflow is to be executed in a Kubernetes cluster. For each workflow step (1, 2a, 2b, and 3) there would normally be a Pod with one or more containers. One of the containers implements the custom logic and is supplied by the workflow author. In this setting, I will replace containers with Wasm modules. Both will be interoperable such that they can be combined seamlessly.
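The idea of mixing both kinds of steps can be sketched in a few lines of Python. Note the assumptions: the dependency structure (step 1 fans out to 2a and 2b, which join in step 3) is inferred from the step labels, the assignment of runtimes to steps is invented for illustration, and none of this reflects how Argo Workflows schedules steps internally.

```python
from graphlib import TopologicalSorter

# Assumed dependencies: each step maps to the set of steps it waits for.
DEPENDENCIES = {
    "1": set(),
    "2a": {"1"},
    "2b": {"1"},
    "3": {"2a", "2b"},
}

# Hypothetical runtime per step; containers and Wasm modules are mixed freely.
RUNTIMES = {"1": "container", "2a": "wasm", "2b": "wasm", "3": "container"}


def execution_waves(dependencies):
    """Group steps into waves; all steps within a wave can run in parallel."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # steps whose inputs are complete
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for wave in execution_waves(DEPENDENCIES):
        print(", ".join(f"{step} ({RUNTIMES[step]})" for step in wave))
```

The ordering depends only on the dependencies between steps, not on how each step is executed, which is why a step can switch from a container to a Wasm module without affecting the rest of the workflow.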

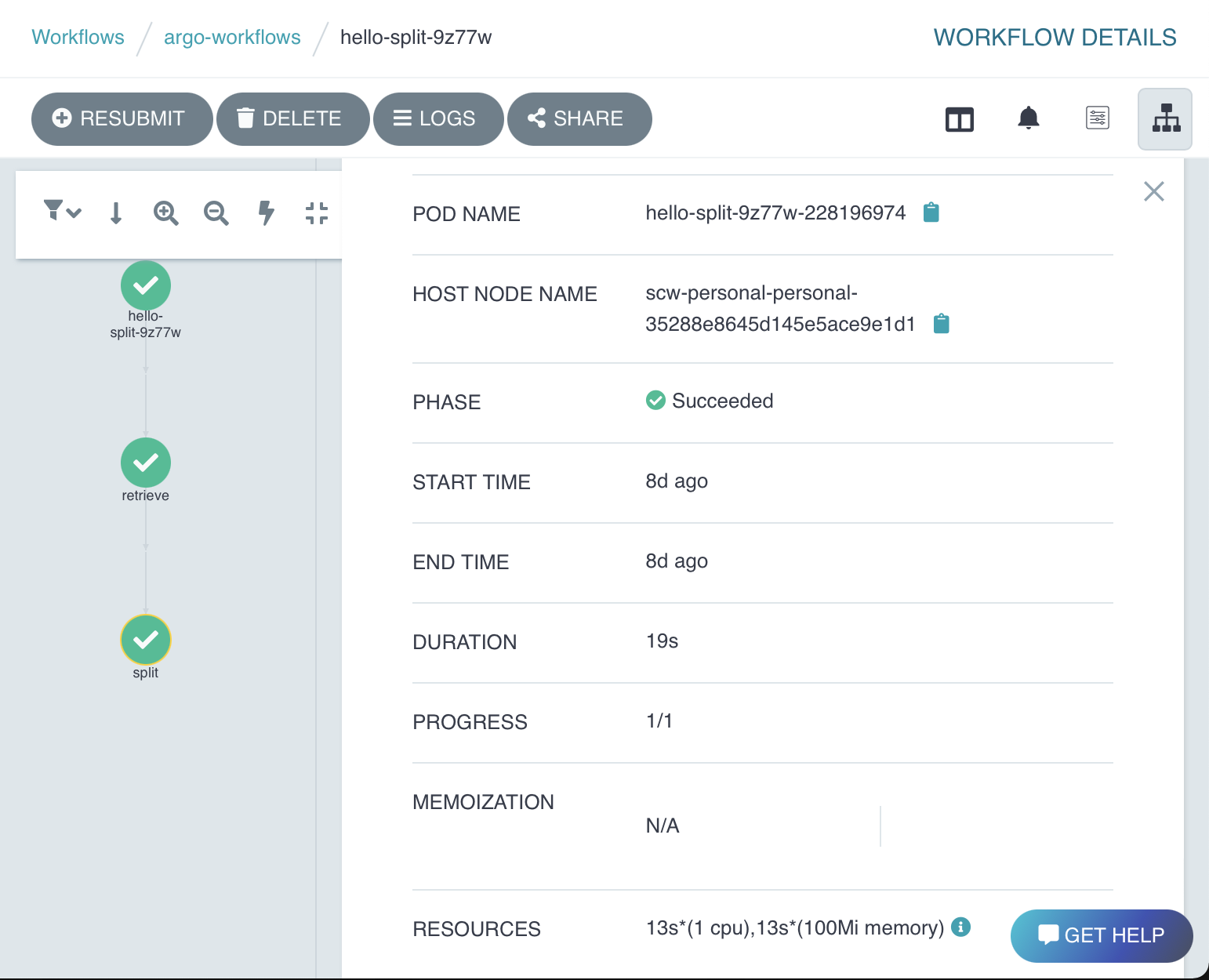

I will use Argo Workflows as a showcase for the serverless Wasm runtime. The integration supports the same configuration for input and output parameters and artifacts. It is fault-tolerant and allows workflow steps to be debugged with the same tools in the Argo Workflows UI and Kubernetes resources. See the screenshot to get a picture of what this will look like in Argo Workflows. Take a look at this snippet from an Argo Workflow definition. This is the existing implementation using a Docker container image:

    - name: split_pages
      inputs:
        artifacts:
        - name: doc
          path: /tmp/doc
      script:
        image: ghcr.io/shark/letterflow-imagick
        command: ['/tmp/doc','/tmp/pages']
      outputs:
        artifacts:
        - name: pages
          path: /tmp/pages

Next, this is how a Wasm module in a workflow step template can be used:

    - name: split_pages
      inputs:
        artifacts:
        - name: doc
          path: /tmp/doc
      wasm: # <- the difference is here
        # references Wasm module in an OCI registry
        image: ghcr.io/shark/letterflow-imagick-wasm
        command: ['/tmp/doc','/tmp/pages']
      outputs:
        artifacts:
        - name: pages
          path: /tmp/pages

The wasm: key is the only necessary change from an end-user perspective.
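Since the two step templates differ only in that one key, deciding how to execute a step could be as simple as the following Python sketch. It is a hypothetical illustration, not the actual implementation: the templates are written as plain dictionaries mirroring the YAML, `step_runtime` is a made-up helper, and `wasm` is the key proposed in this post rather than an existing Argo Workflows field.

```python
# Keys that say how a step is executed. "wasm" is the proposed addition.
RUNTIME_KEYS = ("container", "script", "wasm")


def step_runtime(template: dict) -> str:
    """Return "wasm" or "container" depending on the template's runtime key."""
    present = [key for key in RUNTIME_KEYS if key in template]
    if len(present) != 1:
        raise ValueError(f"expected exactly one of {RUNTIME_KEYS}, got {present}")
    return "wasm" if present[0] == "wasm" else "container"


# The two templates from this post, reduced to the fields that matter here.
docker_step = {
    "name": "split_pages",
    "script": {
        "image": "ghcr.io/shark/letterflow-imagick",
        "command": ["/tmp/doc", "/tmp/pages"],
    },
}
wasm_step = {
    "name": "split_pages",
    "wasm": {
        "image": "ghcr.io/shark/letterflow-imagick-wasm",
        "command": ["/tmp/doc", "/tmp/pages"],
    },
}

if __name__ == "__main__":
    print(step_runtime(docker_step))  # container
    print(step_runtime(wasm_step))    # wasm
```

Inputs, outputs, and artifact paths are untouched by this decision, which matches the goal of keeping the rest of the workflow definition unchanged.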

The implementation point of view is more complex, of course. Part 2 will cover the system architecture for the serverless Wasm runtime.