This post continues my series on a serverless WebAssembly-based runtime for workflows. If you’re new to my project, I suggest beginning with “Part 1: Motivation”.

WebAssembly provides a new means to run workloads in the browser and the cloud. It claims to bring reduced runtime overhead, improved resource utilization, and better security compared to Docker containers. My goal is to combine WebAssembly with Kubernetes. I will extend Argo Workflows such that it can run workflow tasks as a WebAssembly (Wasm) module. Currently, Argo Workflows spawns a new Docker container for every workflow task. My project allows the developer to opt into providing WebAssembly modules for some or all of the workflow tasks.

Refer to my previous post for an introduction to Argo Workflows. Here, I will describe what conceptually happens behind the scenes when a workflow task executes. For each step in the process, I’ll outline the design decisions. Finally, I’ll present initial ideas about how to evaluate and benchmark container-based and Wasm-based workflows to find out whether the assumptions hold.

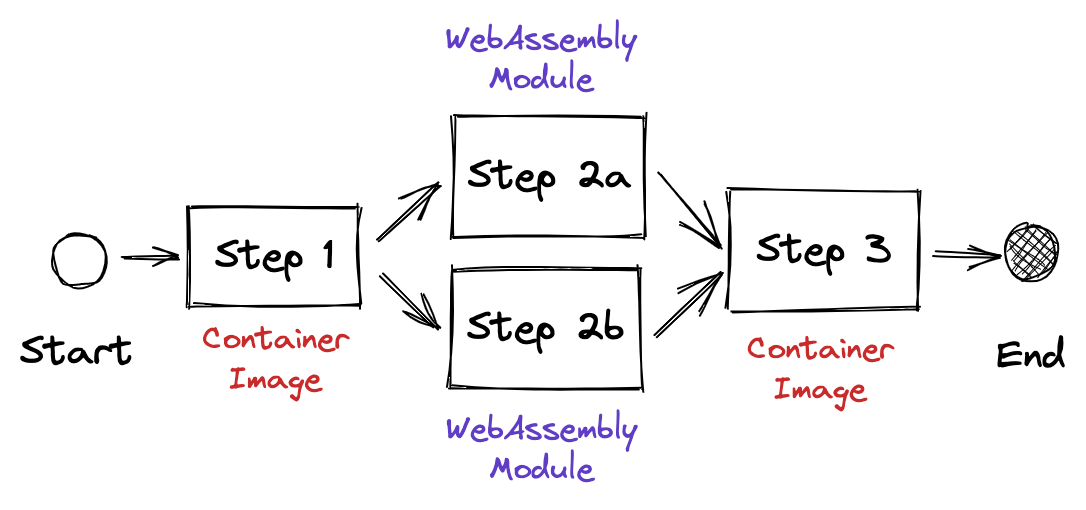

Let’s review the sample workflow again:

We’re mainly interested in steps 2a and 2b, as those are concerned with Wasm. The sequence diagram below visualizes the steps necessary to execute a Wasm-backed workflow task. The boxes with numbers represent system design questions, which are outlined in more detail in the following sections.

Diagram Source

sequenceDiagram

participant u as User

participant argo as Argo Workflows

participant k8s as Kubernetes

participant node as WASM Node

u->>argo: Submit Workflow

loop For each task

Note right of argo: ① Level of Abstraction for Workloads

argo->>k8s: Create Job

Note right of node: ② Kubelet or OCI Runtime

k8s->>node: Create & Schedule Pod

activate node

Note right of node: ③ K8s Resource Spec

node->>node: Prepare inputs

Note right of node: ④ WASM Runtime

Note right of node: ⑤ Module Packaging

Note right of node: ⑥ WASM Interface Definition

node->>node: Execute WASM module

node->>node: Persist outputs

node->>k8s: Set Pod status to "Succeeded"

deactivate node

k8s->>k8s: Set Job status to "Succeeded"

k8s->>argo: Notify Job succeeded

end

argo->>u: Submit Workflow Results

System Design Questions

① Level of Abstraction for Workloads

This question is about responsibility for error handling and retries. My current understanding is that Argo Workflows manages workflow task workloads on the Pod level, meaning it is responsible for monitoring (and restarting) the Pods. This allows fine-grained control but ties the execution logic to the workflow engine. On the other hand, Kubernetes already has the concept of a Job, a generic controller that runs a given Pod to completion and retries it on failure.

To stay reusable in related scenarios, the Wasm runtime should not delegate too much logic to its integration (the workflow engine, in this case).

② Kubelet or OCI Runtime

There are two strategies for adding a Kubernetes Node with a Wasm runtime to a cluster. One is to implement the full Kubelet API, which is more complex but leaves full control over all features in the Pod lifecycle. The other is to provide a Wasm-backed OCI runtime and use Kubernetes’ Kubelet and an off-the-shelf CRI runtime. This is discussed in depth in the second part.

Given the other requirements and constraints, all limitations of an OCI implementation should be checked carefully. For the Wasm runtime to provide a developer-friendly interface, some custom Kubernetes resource specs could be necessary, and an OCI implementation would not allow for them.

③ Kubernetes Custom Resource Specification

Each workflow task has input and output parameters and artifacts. While parameter representation in Kubernetes is straightforward (they are string values), artifacts are BLOBs on S3-compatible storage. They should be made available to the Wasm module without having to download them beforehand. Moreover, the Wasm runtime must handle authentication to the S3 storage on behalf of the module.
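To make the distinction between parameters and artifacts concrete, here is a minimal Python sketch of how a task's inputs might be modeled. All names in it (`Parameter`, `ArtifactRef`, `TaskInputs`, `module_view`, the bucket and key) are hypothetical and not part of Argo Workflows or any existing spec. The only point is that parameters carry their value inline, while artifacts carry a reference that the runtime resolves, so storage credentials never reach the module.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Parameter:
    """A workflow parameter: a plain string value passed inline."""
    name: str
    value: str


@dataclass(frozen=True)
class ArtifactRef:
    """A reference to a BLOB on S3-compatible storage (no content, no credentials)."""
    name: str
    bucket: str
    key: str


@dataclass
class TaskInputs:
    parameters: list[Parameter] = field(default_factory=list)
    artifacts: list[ArtifactRef] = field(default_factory=list)

    def module_view(self) -> dict[str, str]:
        """What the Wasm module would see: values and opaque artifact handles only."""
        view = {p.name: p.value for p in self.parameters}
        # The module receives a handle; the runtime would map it to bucket/key
        # and authenticate against the storage on the module's behalf.
        view.update({a.name: f"artifact:{a.name}" for a in self.artifacts})
        return view


inputs = TaskInputs(
    parameters=[Parameter("message", "hello")],
    artifacts=[ArtifactRef("dataset", bucket="example-bucket", key="data/input.csv")],
)
print(inputs.module_view())
```

Whether the handle is a file descriptor, a stream, or a pre-opened path is exactly the kind of decision this design question has to settle.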

④ Wasm Runtime

The Wasm runtime provides the execution environment for the Wasm modules. For this use case, it will be used as a library that is embedded into the Wasm-based Kubernetes node (see ②). Currently, there are five popular Wasm runtimes: wasmtime, wasmer, WAVM, wasm3, and WasmEdge.

I have already compared the runtimes; more on this will follow. There are two favorable options:

- wasmtime is already integrated with Krustlet, but lacks APIs for advanced use cases such as interfacing with external systems (e.g., SQL databases, HTTP or KV stores)

- WasmEdge provides richer APIs but is not native to Rust and is less mature

Moreover, the wasmCloud project features the concept of capabilities and capability providers, which allows accessing other APIs over HTTP, connecting to a database, etc.

Bringing these approaches together into a unified interface for external capabilities would be a huge advantage: it would simplify writing Wasm modules that interface with external services, which is a very common requirement in practice.
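As a rough illustration of what such a unified interface could look like on the host side, consider the following Python sketch. It is not wasmCloud's API, and every name in it (`Capability`, `InMemoryKeyValue`, `Host`, the `"keyvalue"` capability name) is made up for this example. It only shows the idea that a module calls named capabilities through the host and can reach nothing it was not granted.

```python
from typing import Protocol


class Capability(Protocol):
    """Hypothetical host-side capability that a Wasm module can call into."""

    def invoke(self, operation: str, payload: bytes) -> bytes: ...


class InMemoryKeyValue:
    """Toy key-value capability; a real provider would talk to an external store."""

    def __init__(self) -> None:
        self._data: dict[str, bytes] = {}

    def invoke(self, operation: str, payload: bytes) -> bytes:
        if operation == "set":
            key, _, value = payload.partition(b"=")
            self._data[key.decode()] = value
            return b"ok"
        if operation == "get":
            return self._data.get(payload.decode(), b"")
        raise ValueError(f"unsupported operation: {operation}")


class Host:
    """Routes module calls to registered capabilities by name."""

    def __init__(self) -> None:
        self._capabilities: dict[str, Capability] = {}

    def register(self, name: str, capability: Capability) -> None:
        self._capabilities[name] = capability

    def call(self, name: str, operation: str, payload: bytes) -> bytes:
        if name not in self._capabilities:
            # A module can only reach capabilities it was explicitly granted.
            raise PermissionError(f"capability not granted: {name}")
        return self._capabilities[name].invoke(operation, payload)


host = Host()
host.register("keyvalue", InMemoryKeyValue())
host.call("keyvalue", "set", b"greeting=hello")
print(host.call("keyvalue", "get", b"greeting"))
```

Swapping `InMemoryKeyValue` for a provider backed by a real store would not change the module-facing calls, which is the benefit of a unified interface.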

⑤ Module Packaging

OCI or Bindle?

I have found three packaging formats for headless Wasm modules:

- Krustlet stores Wasm modules in any OCI-compliant registry (“Docker registry”). This method is the most compatible with container images. Note that this method just uses the same registry to store the artifacts; the images themselves are not Docker-compatible. You must use a tool like wasm-to-oci to interact with the registry.

- crun is an OCI runtime that can execute Wasm modules natively using WasmEdge. Because it is an OCI runtime, image handling (i.e., pulling from the registry) is handled on an upper layer by the CRI runtime. Thus, crun’s images are Docker-compatible. They are container images whose command points to a Wasm module.

- Hippo is an open-source PaaS project for HTTP services. The HTTP handler logic is implemented as WebAssembly modules. Its developers introduced a novel packaging format called Bindle, which they use for artifact distribution. Conceptually, Bindle seems like a well-suited format for packaging Wasm-based applications together with their dependencies. The flip side is that the format is not widely adopted.

⑥ Wasm Interface Definition

The Wasm runtime must define a contract as to how it expects the Wasm module to be invoked. The most important question is how input parameters and artifacts are made available to the module and how it can return output parameters and artifacts.

WebAssembly Interface Types (WIT) is the to-be-standardized method for providing a language-agnostic interface definition for Wasm modules and their hosts. However, standardization is still in progress, and tooling support is not fully there yet.

The WebAssembly Procedure Calls (waPC) project seems older (and thus, maybe more mature) but lacks industry support.
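Independent of which interface technology wins, the contract itself can be sketched in a few lines. The Python example below is a hypothetical stand-in, not WIT or waPC: an ordinary function plays the role of the module's exported entry point, and JSON is an assumed encoding. It shows the shape of the contract, where the host encodes input parameters into bytes, calls a single entry point, and decodes and validates the output parameters.

```python
import json
from typing import Callable

# Stand-in for a Wasm export: bytes in, bytes out (hypothetical contract).
ModuleEntryPoint = Callable[[bytes], bytes]


def invoke_task(entry_point: ModuleEntryPoint, parameters: dict[str, str]) -> dict[str, str]:
    """Encode the inputs, call the module, then decode and validate its outputs."""
    request = json.dumps({"parameters": parameters}).encode("utf-8")
    response = json.loads(entry_point(request).decode("utf-8"))
    outputs = response.get("parameters", {})
    # Output parameters are string values, just like input parameters.
    if not all(isinstance(value, str) for value in outputs.values()):
        raise TypeError("output parameters must be strings")
    return outputs


def example_module(request: bytes) -> bytes:
    """Toy 'module' that upper-cases every input parameter."""
    params = json.loads(request)["parameters"]
    result = {"parameters": {name: value.upper() for name, value in params.items()}}
    return json.dumps(result).encode("utf-8")


print(invoke_task(example_module, {"message": "hello"}))
```

Artifacts are deliberately left out here; passing large BLOBs through a byte-encoded request would defeat the goal of not downloading them beforehand.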

Evaluation

The implementation must be evaluated with regard to its design requirements. Those are described in the first post.

Qualitative Evaluation

- Is the architecture aligned with Kubernetes’ design goals (i.e., control loops, CRDs, events)?

- From a conceptual level, can the Wasm runtime be scaled like any other Kubernetes runtime (i.e., by adding nodes)?

- Does the runtime integrate seamlessly with Argo Workflows? More specifically, this involves the number of necessary changes to a workflow design and whether all features of container-based tasks work (e.g., logs, exit status).

Quantitative Evaluation

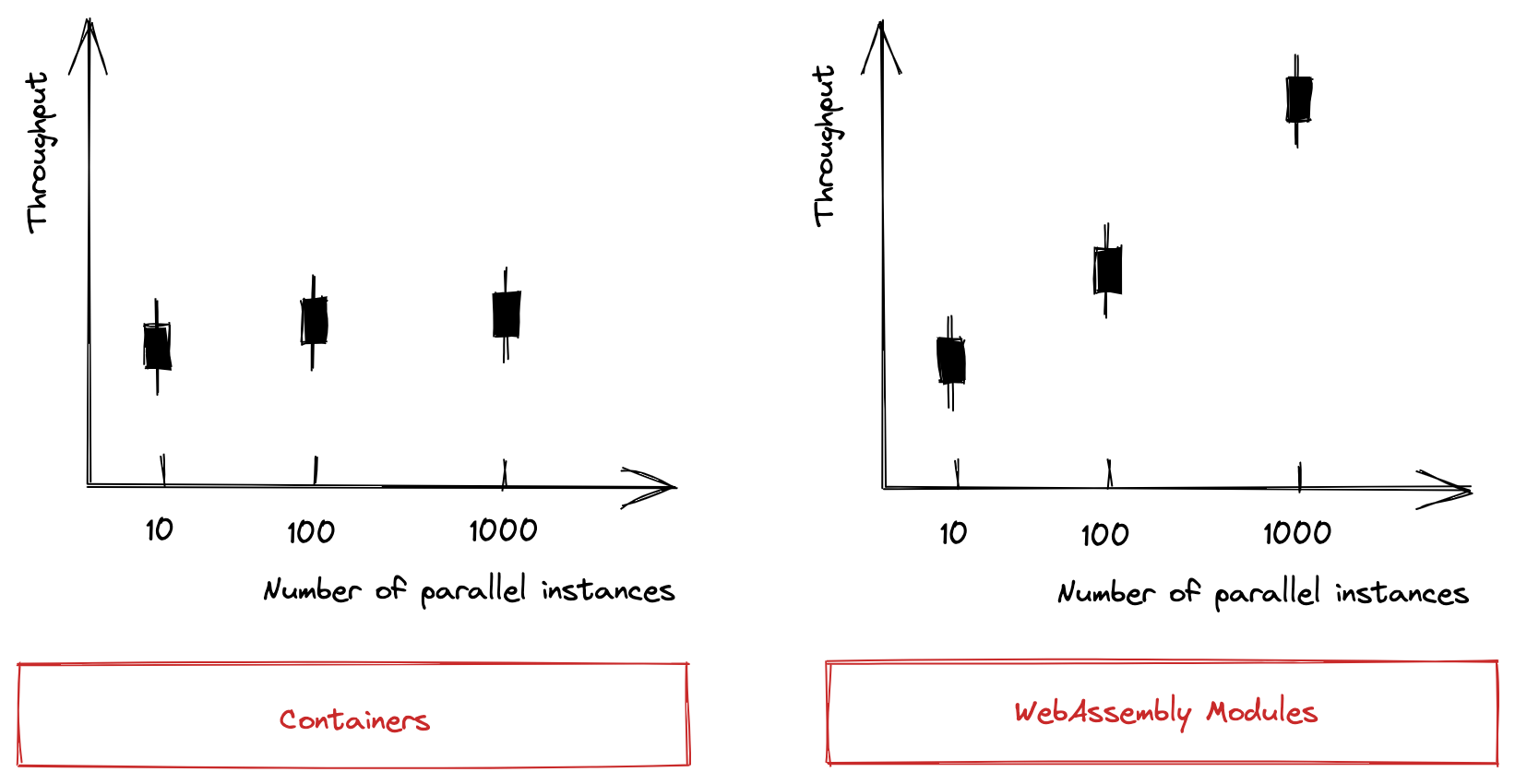

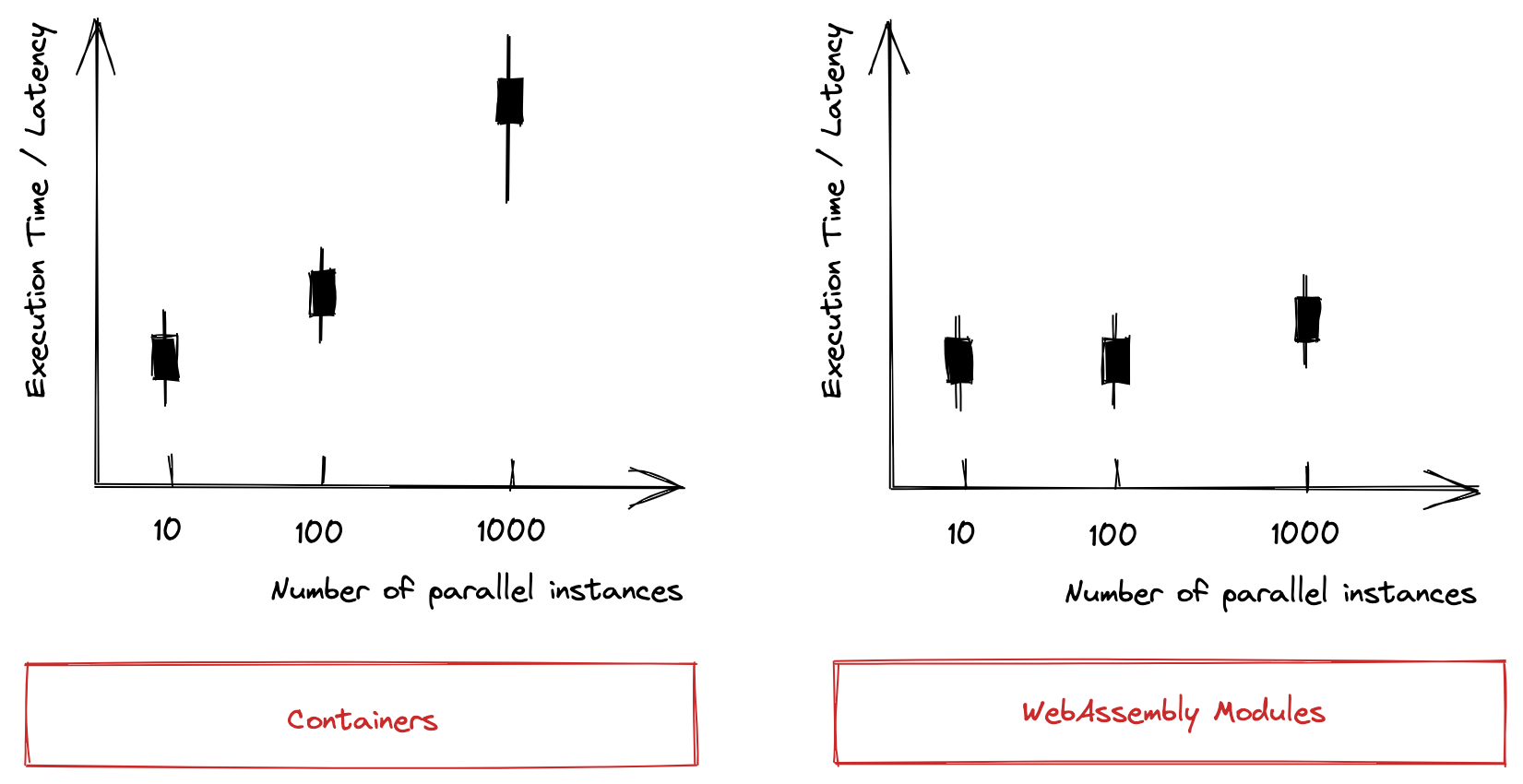

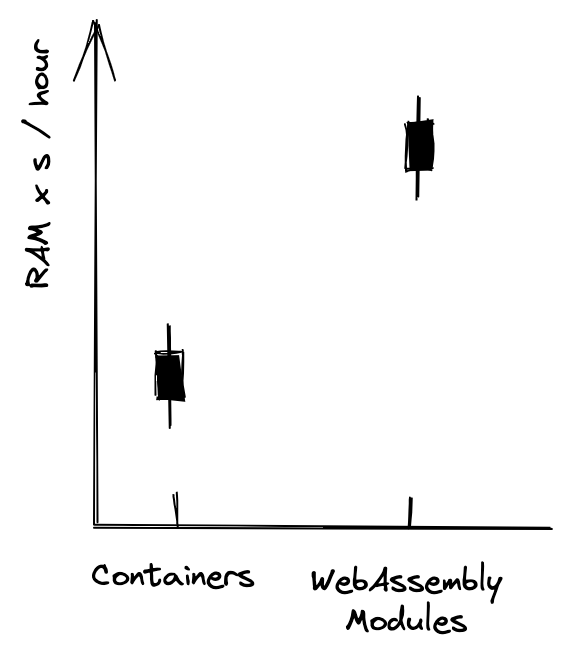

The benchmarks will focus on throughput, latency, and resource utilization. The graphs in this section only indicate the planned approach and my assumptions about the system behavior; the benchmarks have not been carried out yet.

System Throughput

System throughput is defined as the number of workflow tasks that execute successfully within one minute. For containers, I expect throughput to hit an upper bound because of container management overhead (e.g., provisioning and deprovisioning namespaces and network interfaces). As much of this overhead does not apply to Wasm, I expect the throughput of Wasm-based tasks to scale in proportion to the number of parallel instances.
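The throughput definition translates directly into a small calculation. The following Python sketch assumes a hypothetical `TaskRecord` with a completion timestamp and a success flag; the record format and the sample values are made up for illustration and are not benchmark results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskRecord:
    finished_at: float  # seconds since the start of the benchmark run
    succeeded: bool


def throughput_per_minute(records: list[TaskRecord], window_start: float) -> int:
    """Count tasks that succeeded within the one-minute window starting at window_start."""
    window_end = window_start + 60.0
    return sum(
        1 for r in records
        if r.succeeded and window_start <= r.finished_at < window_end
    )


records = [
    TaskRecord(5.0, True),
    TaskRecord(30.0, False),  # failed tasks do not count
    TaskRecord(59.9, True),
    TaskRecord(61.0, True),   # falls outside the first window
]
print(throughput_per_minute(records, window_start=0.0))  # prints 2
```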

Latency

Latency is defined as the time until a workflow task’s code is executed in the cluster. I expect latency to behave similarly to system throughput. However, latency is measured at a finer scope than system throughput (workflow task vs. workflow), so outliers can be identified more easily.
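A per-task latency measurement could be computed as in the Python sketch below. It assumes that the clock starts when the task is created in the cluster, which is my assumption for this example; the tuple format and the sample timestamps are likewise invented. Reporting the maximum next to the median is one simple way to make outliers visible.

```python
import statistics


def task_latencies(events: list[tuple[float, float]]) -> list[float]:
    """Return started_at - created_at for each (created_at, started_at) pair."""
    return [started_at - created_at for created_at, started_at in events]


# (created_at, started_at) in seconds; the third task is a deliberate outlier.
events = [(0.0, 0.5), (1.0, 1.5), (2.0, 5.0)]
latencies = task_latencies(events)

print(statistics.median(latencies))  # prints 0.5
print(max(latencies))                # prints 3.0
```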

Resource Utilization

I define resource utilization as the ratio of billable (main) memory time to total memory time within one hour. Total memory time is one hour multiplied by the total amount of memory in the cluster. Billable memory time is the time during which user code is actually running, with all management overhead excluded. Total billable memory time is the sum of the billable memory time of all workflow tasks.
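As a worked example of this ratio, the Python sketch below weights each task's running time by the memory it occupies, in the style of the GB-second unit used for FaaS billing. That weighting is my reading of the definition, and the cluster size and task figures are made-up numbers, not measurements.

```python
SECONDS_PER_HOUR = 3600.0


def resource_utilization(
    tasks: list[tuple[float, float]], cluster_memory_gib: float
) -> float:
    """Ratio of billable memory time to total memory time within one hour.

    Each task is (memory_gib, running_seconds), where running_seconds covers
    only the time user code runs, without management overhead.
    """
    billable = sum(memory_gib * seconds for memory_gib, seconds in tasks)
    total = cluster_memory_gib * SECONDS_PER_HOUR
    return billable / total


# Two tasks on a 64 GiB cluster: 0.5 GiB for 30 minutes and 1 GiB for 15 minutes.
tasks = [(0.5, 1800.0), (1.0, 900.0)]
print(resource_utilization(tasks, cluster_memory_gib=64.0))  # prints 0.0078125
```

Here, the billable memory time is 1800 GiB-seconds out of 230400 GiB-seconds available, so less than 1% of the cluster's memory time is spent on user code.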

“Billable Memory Time” is used by all cloud hyperscalers for FaaS billing. Thus, it can measure how much sense a transition to WebAssembly modules makes from an economic perspective.

I expect the total billable memory time to be higher for WebAssembly-backed workflows. Again, this is due to the reduced management overhead.

In this post, I described which design decisions are still pending, what my options are, and how I will evaluate my success in the end. In the next post, I will go into more detail about the integration of the Wasm runtime with Argo Workflows and Kubernetes.